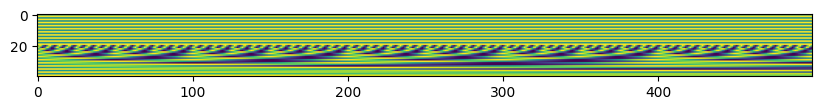

import torch

d_model = 40

a = torch.zeros((1, d_model, 16, 500))

l = PositionalEncoding2D(d_model=d_model, freq_factor=1_000)

l_pos = l(a)

print(l_pos.shape)

torch.Size([1, 40, 16, 500])

API Reference

DownBlock2D (in_ch, out_ch, kernel_size=2, stride=2, padding=0, use_conv=True)

A 2D downscaling block.

UpBlock2D (in_ch, out_ch, kernel_size=2, stride=2, padding=0, use_conv=True)

A 2D upscaling block.
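With the defaults `kernel_size=2`, `stride=2`, `padding=0`, a down block would halve each spatial dimension and an up block would double it, assuming `use_conv=True` selects a strided convolution and a transposed convolution respectively. That is an assumption, since the implementation is not shown here. The sketch below only reproduces the standard output-size arithmetic for those two operations; the helper names are hypothetical and not part of the library.

```python
def conv_out_size(n: int, kernel_size: int = 2, stride: int = 2, padding: int = 0) -> int:
    """Output length along one axis of a standard strided convolution (dilation 1)."""
    return (n + 2 * padding - kernel_size) // stride + 1


def conv_transpose_out_size(n: int, kernel_size: int = 2, stride: int = 2, padding: int = 0) -> int:
    """Output length along one axis of a standard transposed convolution
    (dilation 1, no output padding)."""
    return (n - 1) * stride - 2 * padding + kernel_size


# Spatial size taken from the usage example on this page: 16 x 500.
height, width = 16, 500
down = (conv_out_size(height), conv_out_size(width))
up = (conv_transpose_out_size(down[0]), conv_transpose_out_size(down[1]))
print(down)  # (8, 250)
print(up)    # (16, 500)
```

Under these assumptions, a down block followed by an up block with matching parameters restores the original spatial size whenever the input dimensions are even.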

ResBlock2D (in_ch, out_ch, kernel_size, skip=True, num_groups=32)

A 2D residual block.

ResBlock2DConditional (in_ch, out_ch, t_emb_size, kernel_size, skip=True)

A 2D residual block that additionally takes a time-step \(t\) embedding as input.

FeedForward (in_ch, out_ch, inner_mult=1)

A small dense feed-forward network as used in transformers.

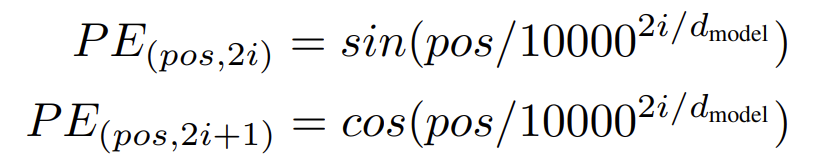

Create sinusoidal position embeddings, the same as those used in the original transformer:

PositionalEncoding (d_model:int, dropout:float=0.0, max_len:int=5000, freq_factor:float=10000.0)

An absolute positional encoding layer.
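For orientation, the transformer-style sinusoidal table can be sketched without any dependencies as follows. This is a minimal illustration, not the library's implementation: the function name `sinusoidal_table` is hypothetical, and it assumes `freq_factor` plays the role of the base constant (10000 in the original transformer) and that `d_model` is even.

```python
import math


def sinusoidal_table(max_len: int, d_model: int, freq_factor: float = 10000.0) -> list:
    """Return a max_len x d_model table of position encodings.

    Even columns hold sines and odd columns hold cosines of the same angle.
    """
    if d_model % 2 != 0:
        raise ValueError("this sketch assumes an even d_model")
    table = []
    for pos in range(max_len):
        row = []
        for i in range(0, d_model, 2):
            # The angular frequency shrinks geometrically with the channel index i.
            angle = pos / freq_factor ** (i / d_model)
            row.extend([math.sin(angle), math.cos(angle)])
        table.append(row)
    return table


pe = sinusoidal_table(max_len=4, d_model=6)
print(len(pe), len(pe[0]))  # 4 6
print(pe[0])                # [0.0, 1.0, 0.0, 1.0, 0.0, 1.0]
```

A smaller `freq_factor`, such as the `1_000` used in the usage example on this page, shortens the longest wavelength, so the encodings vary faster along the position axis.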

TimeEmbedding (d_model:int, dropout:float=0.0, max_len:int=5000, freq_factor:float=10000.0)

A time embedding layer.

PositionalEncodingTransposed (d_model:int, dropout:float=0.0, max_len:int=5000, freq_factor:float=10000.0)

An absolute positional encoding layer.

PositionalEncoding2D (d_model:int, dropout:float=0.0, max_len:int=5000, freq_factor:float=10000.0)

A 2D absolute positional encoding layer.
